Read Stonelake v. Meta for context and full disclosure about personal stakes

Workplace discrimination is rarely just one bad actor making a poor choice — it’s part of a system designed to protect power at all costs. At Meta, that cost included the safety of the very users we were trusted to protect.

As weeks and then months stood between me and my time at the company (and its propaganda, its enigmatic directives, the constant redirect to urgent, critical problems to solve, and the gaslighting to justify a growth-at-any-cost mentality), it’s now clear that this disregard for women transcended teams, locations, and individual leaders at Meta. It was systemic.

“I had told myself I could do more on the inside than the outside, but realistically, being the grit in the machine wasn’t working.”

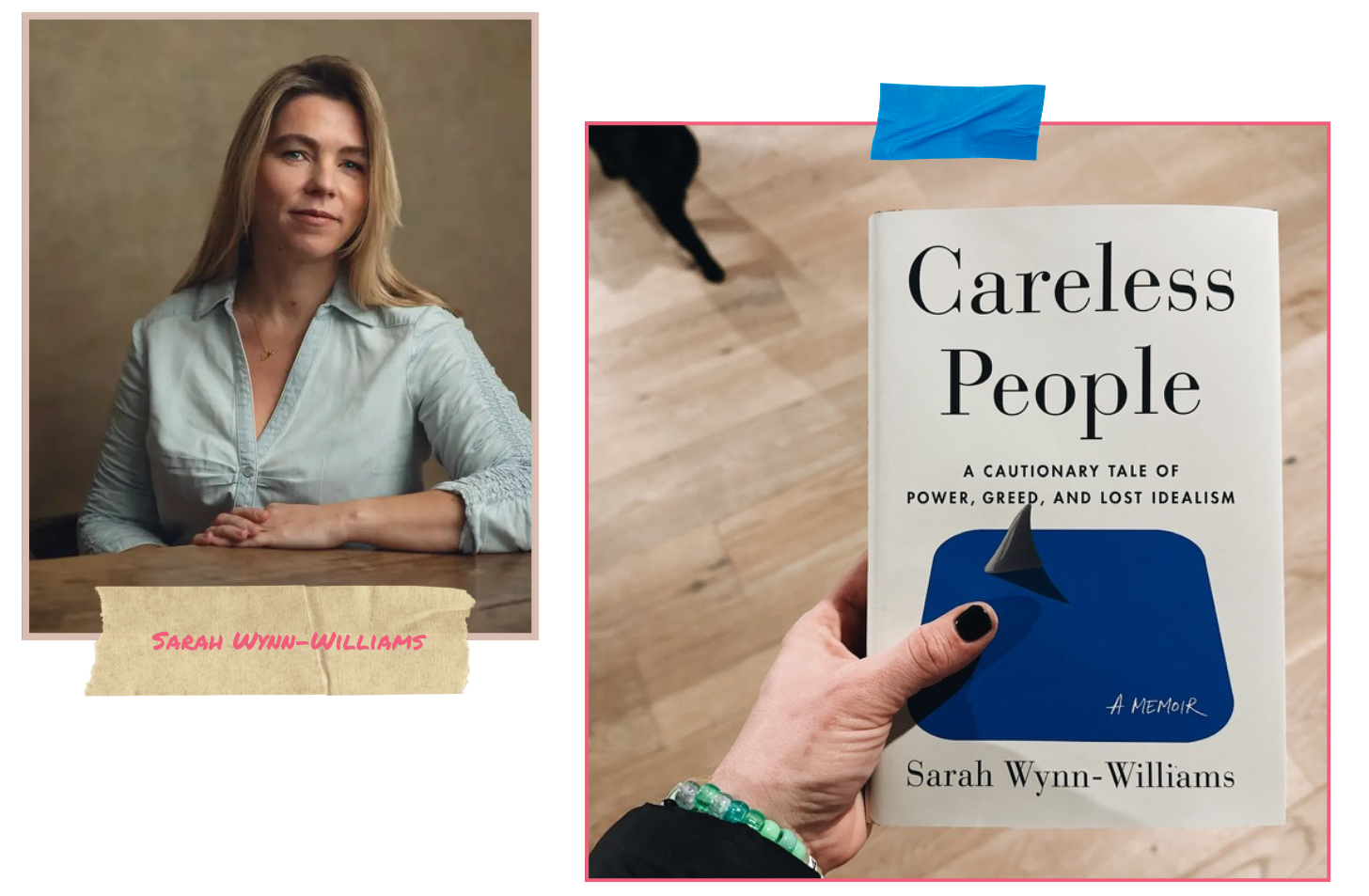

Sarah Wynn-Williams, Careless People

Sarah Wynn-Williams, Facebook’s former Director of Public Policy, detailed her experience within this same system in a memoir, Careless People, released this week with Flatiron Books, an imprint of Macmillan Publishers.

Despite her career history, impressive credentials, and insider status, there seems to be more chatter about Meta’s efforts to silence and discredit SWW than about the information she’s offered; the information that’s this worthy of extinguishing.

That’s right, the company that just used Freedom of Speech to defend their cowardice has blocked the promotion of Careless People. An arbitrator ruled in favor of Meta, claiming that promotion would cause the company to suffer “immediate and irreparable loss.”

What about the “immediate and irreparable loss” of Meta putting profit before people, or the stories coming to light like mine and SWW’s, detailing how Meta punishes women who call attention to it?

Despite the fact that Sarah Wynn-Williams corroborated concerns that industry watchers have long speculated about, journalists have reported on, and even Meta itself has previously acknowledged, the willingness to question SWW’s competence rather than engage earnestly with the substance of her revelations raises some uncomfortable questions:

Why must we consistently diminish women's authority?

Who are we harming when we do?

First Our Bodies, then Our Ideas

When my boss brazenly put his hand down my pants as I repeated “no,” when Joel Kaplan (Meta exec, Sheryl’s ex-boyfriend, but most widely known for being the guy in the background of his college buddy’s sexual assault hearing) felt safe enough to grind against an unwilling Sarah Wynn-Williams after calling her “sultry” in front of a group at a work event, they were disregarding our bodies, clearly. But that’s just the start.

Even if we could end every instance of workplace harassment, systemic bias would still end women’s careers.

Mary Ann Sieghart's book, The Authority Gap, delves into the pervasive issue of women’s thoughts and expertise being systematically underestimated and undervalued across various sectors, irrespective of their qualifications or positions.

"Men are assumed to be competent until proven otherwise, whereas a woman is assumed to be incompetent until she proves otherwise."

Mary Ann Sieghart

In Careless People, Sarah Wynn-Williams describes a situation where the company issued a public denial of their ability to target teens based on emotional state, in the midst of a PR crisis when it was revealed that beauty advertisers could target teens who felt “worthless.”

When an important client called SWW expressing confusion that Facebook would deny targeting tools that are powerful and granular, she turned to VP of Communications and Public Policy, Elliot Schrage, “Relaying the call I had from the ad executive and my own concerns that we’re lying to the public.” In Careless People, Elliot’s amused, saying, “if you and he both hate this—for opposite reasons—we must’ve gotten this exactly right.”

Would Sarah Wynn-Williams’s concerns about child safety have been taken seriously if instead of “Sarah” she were “Sam”?

Similarly, my warnings about children's exposure to hate speech, sexual harassment, and bullying on Horizon were dismissed, putting vulnerable young users at risk. Leadership knew that in one test, users with black avatars were subjected to racial slurs within 34 seconds of entering Horizon, yet growth was prioritized so desperately that they diverted employees from FTC Consent Order compliance despite the team sounding alarms.

I was denied opportunities and promotions when documenting my achievements would expose the failures of male VPs, after I had been relied on to lead the team through crises they had caused. This didn't happen once; it repeated across different teams, locations, and reporting structures. This repeated until I broke.

Revolving Doors of Women

In the weeks leading up to my medical leave, I reached out to Boz in one of my escalation efforts to alert senior leadership about the toxic dynamics in my organization, after being disregarded and gaslit to the point of a psychological crisis.

Though he was known for being sometimes brusque, I’d also found Meta’s Reality Labs Head and Chief Technology Officer, Andrew “Boz” Bosworth, to be authentic and intelligent, and I respected him. In 2012, when I was in a housing pinch during a black mold disaster (never rent an apartment with carpet in the bathrooms!), Boz offered me his extra house in San Mateo that was sitting empty pre-construction. While I ended up with a different solution, the gesture touched me. He’d made mistakes as a leader, but he fixed them, or at least I believed as much.

In January 2023, I write to Boz in a Workplace message:

“I’m concerned that I continue to see senior women leaving in droves (4D+ women in RL on medical leave or left team in last ~month)… I have never had women on my team observe the boy’s club dynamic, be crushed by it, and ask if they should factor this dynamic into their career track here… From a business standpoint, I lose confidence that we will be able to be successful with this level of organizational toxicity…”

I close with an adage, something I’d heard from the recently appointed Horizon Product Leader, Gabe Aul: “Culture is what we encourage, punish, or ignore. I’ve been thinking a lot about the ignore part. Hope you are too. xx”

Boz responded later that day.

“My staff is one third women as it has been for some time and while we have lost women from that group we have added them in equal measure,” he says before adding: “However, it is clear in your specific circles there is a major shift afoot…”

Meritocracies, like Rainbows and Unicorns

Boz's response exemplifies a dangerous cliche in tech: when confronted with systemic gender bias, leadership points to representation statistics rather than addressing the toxic culture. This defensive redirection is part of a larger mythology in tech: the belief in meritocracy.

Representation is never enough on its own because meritocracies are myths. Many choose to ignore the data and instead believe we can simply wish equity into existence.

Sam Lessin and Joe Lonsdale — who are notably both White VCs who live in California and went to Harvard and Stanford — recently launched Merit First, featuring a manifesto that begins with “American companies are in a crisis,” and ends with “… we choose meritocracy as our path forward.”

I’ll summarize: they’ve built a more efficient pipeline to inequitable workplaces.

Skills-based hiring creates a pathway, but what happens after they get a job?

What about the systemic biases that show up in promotions, leadership opportunities, workplace culture, and retention? The long-mythologized garage-to-greatness story is deeply embedded in Silicon Valley's identity: a competitive marketplace where only the best code wins. But that’s not what happens.

With women representing only 19% of developers, research from GitHub's 12-million-person community reveals that code written by women is accepted at higher rates than code written by men – but only when gender remains hidden. When female developers' gender becomes visible, acceptance rates drop below those of their male counterparts.

Without counter-bias measures, even the most meritorious hiring practices feed people into the same broken system where bias will dictate whose careers and ideas break through. Unmanaged bias has already been shown to undermine product-market fit and negatively impact tech products:

Google’s PR department spent years apologizing for a photo-labeling system that misidentified Black people as gorillas

Nikon’s “smart” digital cameras faced backlash for flashing “did someone blink?” messages when photographing Asian people

Object-detection software failed to recognize dark-skinned individuals in virtual reality games, and more dangerously, in the models designed to prevent self-driving cars from hitting pedestrians

The Urgency to Debias AI

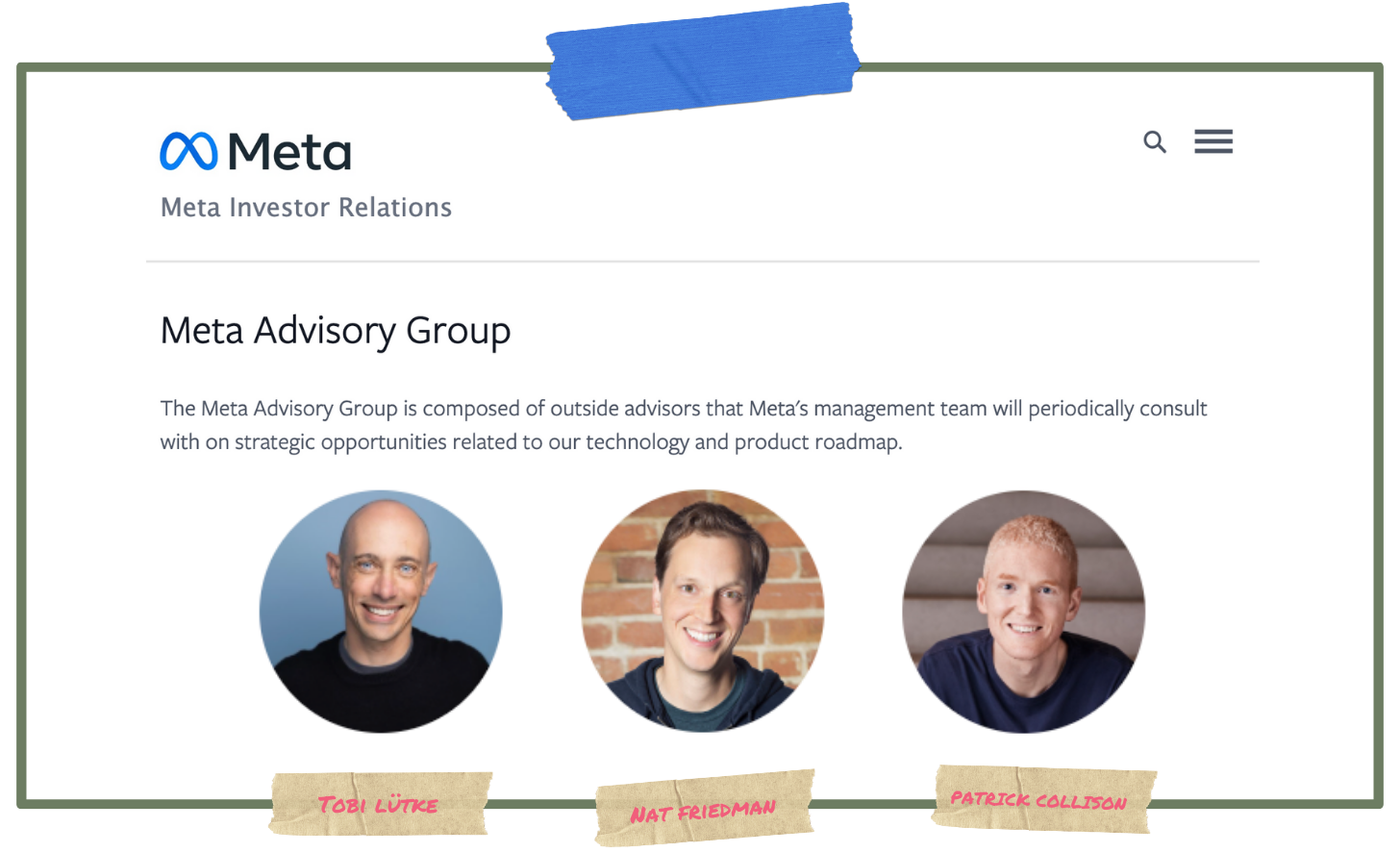

The future is being built by an industry where under 20% of AI VC funds went to companies with a female founder and only 10% of AI researchers at Google are women. When they created an AI advisory council, Meta appointed exclusively white men with no ethicists or researchers.

AI systems, by nature, reflect the data and perspectives they are trained on, which means homogeneity in development leads to blind spots that can reinforce biases, exclude vulnerable populations, and amplify systemic inequities.

“Anyone who has worked closely with technology can tell you… how technology can create unexpected harms. That’s one thing when it’s a social media platform that serves over three billion people. It’s another when it’s a second-strike capability in the South China Sea. That is, the automated ability to launch a devastating nuclear retaliation after absorbing a disarming first strike.”

Sarah Wynn-Williams, Careless People

Technology offers to improve lives at an unprecedented pace, and by the same token it can cause destruction at scale.

Sarah Wynn-Williams shares in her epilogue that she’s been drawn to AI Policy lately, “which is wild with geopolitical and policy issues.”

And she helps us understand “some of the most fundamental existential questions: Should a human be involved before nuclear weapons are triggered? Should lethal or nonlethal AI weapons operate under human rights oversight or control? or What rules need to be in place to avoid inadvertent escalation with the use of AI enabled weapons systems in Warfare? All questions humans have never had to deal with before.”

Durable solutions acknowledge biases exist and prevent them from determining outcomes

Imagine this enterprise cybersecurity proposal: “Despite evidence that we must safeguard the company against digital threats, our plan is to just train our employees to do the right thing once or twice a year.”

Yet that’s exactly what we’re doing when we pour $8 billion a year into DEI trainings and call it enough. It’s not enough.

A mature risk management culture ensures all employees feel safe and are safe to voice concerns.

Technology and AI leaders taking this risk and responsibility seriously will:

Prioritize DEI as a core business function, connecting outcomes to executive compensation

Obtain third-party certifications such as the GEN Certification, which evaluates companies across 200+ indicators to prove they’ve gone beyond empty promises to meaningful, measurable action

Track and report on hiring, firing, pay, and promotions for protected identities and at the intersections of these identities

Support legislation calling for whistleblower protections like the “Silenced No More Act” (SB 331), co-sponsored by Ifeoma Ozoma, who experienced discrimination in tech first-hand. SB 331 prohibits employers from using non-disclosure agreements (NDAs) to silence employees who speak out about harassment or discrimination

Culture is What You Reward, Punish, and Ignore

When it didn’t seem I was getting through to Boz in that January 2023 Workplace chat, I clarified:

“I am not concerned so much about representation vs. what the experience is like for the women representing,” I type. “I’m not trying to stage a take down of an individual or individuals. It’s more that it seems even those behaving badly aren’t grasping that with so many behaving badly at once, and often in coordination, it turns into one of those ‘work twice as hard to go half as far’ things. I’ve been through a lot in 14 years… and here I am stronger and more confident and capable than I have ever been, getting eating up and spit out. It just worries me deeply.”

After encouraging him to reach out to the other women who had recently departed or were on leave and ask for their candor, I said:

“You know how much I love and appreciate how human and kind and real you are. You are one of my Facebooks in a sea of Metas. And Facebooks doesn’t necessarily mean tenured. They just mean, connected to the core of who we are as a company, and here to build the best products, period.”

He gave my message a “care” emoji and said, “Well I'm pretty sure I know one of the four women you are referring to, but I don't know if I have the full list. This is something I will follow up on, of course.”

He didn’t. He did nothing, as far as I can tell.

Sarah Wynn-Williams describes a similar effort on her part, just before she was discarded, too. “All through the conversation, [Elliot’s] careful to say very little. I tell him it’s not just me. It’s a much broader problem at the company. ‘I need to understand what has gone on here,’ Elliot responds, which is surprising because he knows exactly what’s been going on. Elliot turns his back to dismiss me and the conversation is done.”

When does ignorance become negligence?

Ignorance becomes negligence long before joining the C-suite of a powerful and influential global company worth $1.5 trillion and reaching over 3 billion people every month.

SWW’s experience of watching from inside as immense power and wealth led to ethical compromises and a departure from the company's original ideals is consistent with mine.

Amnesty International confirms Meta’s culpability in Myanmar, Senate hearings have laid bare the devastation of teen suicides, and SWW’s recent SEC filing calls attention to Meta’s willingness to compromise safety for power in China.

“None of the senior leaders thought about this enough to put in place the kinds of systems we’d need, in Myanmar or other countries. They apparently didn’t care. These were sins of omission. It wasn’t the things they did. It was the things they didn’t do.”

Sarah Wynn-Williams, Careless People

Billionaires hand-waving about innovation and efficiency and meritocracies is performative idealism that’s hurting the products we build and the people whose lives we claim to improve.

We need countermeasures, we need truth tellers, and we need safeguards — and not those built, bought, or brokered by billionaires.

“Instead of fixing these things, this ongoing suffering they have caused, they seem indifferent,” Sarah Wynn-Williams writes, reflecting my experience, too. “They’re happy to get richer and they just don’t care.”

“Women have left but we have replaced them in equal numbers” is just so freaking mind-boggling. Like, “we literally don’t care because they’re all interchangeable.” Just wow. Thank you for this Kelly.

Yeh, one of the first questions I asked the first woman I met who worked in Meta was "how many women do you work with in proportion to men?" Her answer was very PC and clearly a dodge, which made me feel sad and worried. I was seeing constant evidence of Meta's tech-bro culture inside of Horizon Worlds. Eventually I closed my practice and took a contract with Meta because I was so confused and concerned about their lack of actual DEI efforts in the community. I wanted to understand where their priorities were and what the hang-up was with implementing the assertions that Meta kept spinning on DEI. I got my answers, and fucked up my life in the process of finding them. Thanks for speaking out.