I Testified Before Two Washington State Senate Committees on Addictive Feeds and AI Companion Legislation This Week

Because I’ve seen how tech companies behave when child safety threatens growth and profit

Why Washington Is Done Waiting for Big Tech to Regulate Itself

In the last week, I’ve testified before two Washington State Senate committees in support of two bills aimed at protecting children online.

One addresses addictive social media design. The other regulates AI companion chatbots.

They cover different technologies, but they respond to the same underlying problem: the tech industry has had decades to regulate itself, and it has chosen growth over safety every time.

I know this because I spent nearly fifteen years inside Meta. I joined the company early, when it still spoke sincerely about connection and community. I rose from an hourly employee to a director, earned repeated top-tier performance ratings, and believed the industry line that safety was being handled responsibly. That everything possible was being done to keep kids safe.

That belief ended in 2022, when I joined the leadership team for Horizon Worlds, Meta’s flagship virtual reality platform.

Testifying on SB 5708: What “Voluntary Compliance” Looks Like in Practice

Inside Horizon Worlds, it was an open secret that a significant portion of users were children.

Leadership knew Meta was violating its own policies and federal law, collecting children’s data, and exposing them to unknown adults without parental consent or meaningful controls.

What mattered most was not fixing the problem, but managing exposure.

There were organization-wide directives to avoid taking notes or creating records that could be discoverable. When leadership decided to playtest the product ourselves, we couldn’t hear one another over the sounds of screaming children. The response was not to address child access, but to move the test to a private instance.

At the same time, my job was to help expand the product. To new countries. To mobile. Officially to teens and kids as young as ten. The plan of record was to imply the existence of parental controls even though they weren’t ready.

When I raised the problem, I became the problem. I was told to silence other worried employees as a demonstration of my skill. I refused. I was removed from meetings required to do my job.

This context matters when companies tell lawmakers they can be trusted to self-regulate.

SB 5708 does not regulate speech or viewpoints. It limits the most addictive and exploitative design features for minors: algorithmic feeds, endless scroll, and push notifications designed to pull kids back at all hours.

The tech lobby says this legislation would limit parental agency. In reality, platforms already stripped it away by obscuring known harms and optimizing engagement in ways families cannot see or meaningfully consent to.

Watch the full hearing on SB 5708 here.

But What About Tech’s Profits?

At the end of my testimony, Senator Wilson asked this question:

“Thank you, Madam Chair, and with your indulgence, I’m going to ask a question that may be fairly obvious to a lot of us… Is it your opinion and your belief that this type platform still will allow, if they followed responsible action, still would allow them the opportunity to remain profitable, which is obviously a quest to them? Can this be done and the safety measures be enacted and respected still make a profit?”

Brian Boland, who was also testifying, and I both said “absolutely.”

Brian added:

“Having actually built the revenue business to the 100+ billion dollars per year that they generate currently in profits, there’s plenty of margin that this type of change would not make them have any challenge generating revenue.”

I closed with:

“I consider it to be extraordinarily disgraceful that this is even an argument, because, let’s say that taking measures to protect the safety and stop kids... from dying due to their quest for profitability... I can’t imagine another circumstance where we would say ‘let’s make sure we don’t kill this company’ instead of ‘let’s make sure we don’t kill kids.’”

Testifying on SB 5984: When Child Safety Threatens the Business Model

I also testified in support of SB 5984 because I’ve seen what happens when child safety threatens legal or financial exposure.

Meta approved internal chatbot rules allowing sexualized interactions with minors, including romantic and sexual role play and praising a child’s body. Those rules were approved not just by legal and communications teams, but by the company’s Chief Ethicist.

These facts alone should permanently end the idea that voluntary safeguards are sufficient.

SB 5984 prohibits sexually explicit or suggestive content for minors, bans manipulative engagement tactics, and mandates real detection and response protocols for self-harm and suicidal ideation.

It does not ban speech. It regulates a category of commercial products that simulate intimacy, retain emotional context, and adapt in ways that can profoundly affect vulnerable users.

Watch the full hearing on SB 5984 here.

The First Amendment Argument, and Why It Keeps Failing Kids

During the SB 5984 hearing, my friend Aaron Ping testified and spliced his testimony alongside that of a representative from an organization aligned with the tech industry. Watch the video here:

Aaron’s son, Avery, is gone. Predators used digital systems to reach him, manipulate him, and erase evidence.

“After that, you learn the difference between slogans and safety.”

The opposing testimony followed the script Aaron predicted: AI companions are “expressive tools.” Regulation equals censorship. Regulating design is regulating speech.

Aaron had already named this sleight of hand:

“They’re not defending free speech. They’re defending the right to run relationship-simulation products on minors without responsibility.”

He described what the bill actually regulates, in concrete terms:

“This bill does not ban ideas. It doesn’t police viewpoints. It regulates a commercial system that simulates intimacy, adapts emotionally, retains personal context, and can keep a child engaged for hours, precisely when that child is lonely, anxious, ashamed, or spiraling.”

This is the core fallacy in the First Amendment argument. AI companion chatbots are not town squares. They are not forums for public discourse. They are commercial products designed to make psychological contact at scale.

Calling that “speech” stretches the First Amendment into what Aaron called “a corporate immunity shield.”

He went further:

“Since Citizens United, the influence machine runs almost 24/7. When they cite free speech to block child safety rules, it isn’t constitutional principle. It’s a business strategy.”

And finally:

“The point of constitutional rights was to protect the people, not constitutionalize a business model.”

Washington Wants More Than Sugar Pills

In both hearings, industry representatives acknowledged harm while arguing regulation is unconstitutional, too complex, too burdensome, or premature.

I heard those same arguments inside Meta.

When companies claim compliance is too difficult, lawmakers should ask where their resources actually go. This industry is capable of laying deep-sea fiber around the globe in pursuit of growth. Of flying drones over Africa to increase market share. They’re the boy-wonders, innovators, capable of extraordinary technical coordination, unless profit is at stake.

Industry representatives said that tech companies are already solving these issues with parental controls, even though these “parental controls” have repeatedly been shown to be ineffective. They are sugar pills: they make parents feel safer without actually making their kids safer.

Voluntary compliance fails because it asks companies to act against their own business interests. When safety threatens engagement or market share, safety loses.

Washington’s proposed legislation is not radical. It is measured. It responds to documented harm. It does not assume bad intent. It assumes reality. It’s based on repeated behavior.

Children deserve more than promises. Parents deserve transparency. And lawmakers deserve better than being told, once again, to trust systems that have already shown they will not change on their own.

The era of voluntary compliance should be over.

Testifying on Principle, Not Payouts

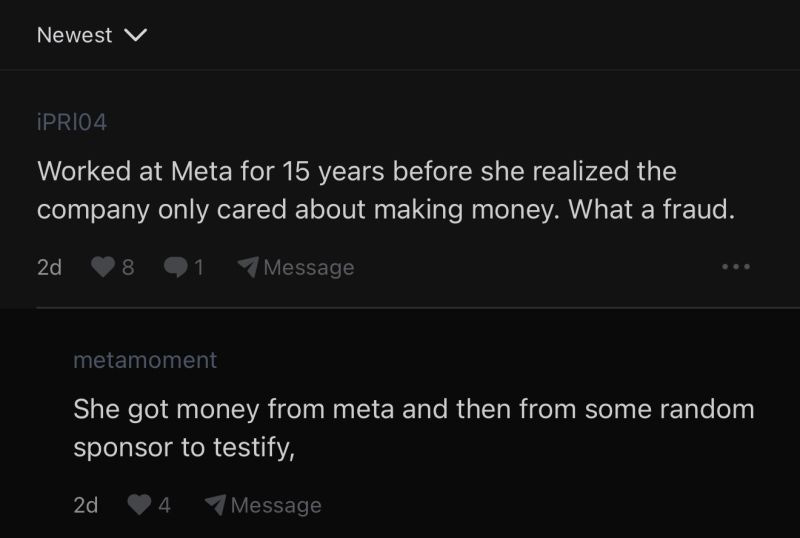

As I shared on LinkedIn, my 5708 testimony was posted on Blind:

No, I haven’t been paid by random sponsors to testify (wouldn’t that be nice?). This work has come at a huge personal cost, in all the ways.

No payouts for FTC testimony, meetings with congressional staff, state level advocacy, or anything else.

I didn’t sign their hold harmless, which meant no severance, and I left millions in awarded but unvested stock on the table.

My only current source of income is paid subscribers on Substack, where I’m earning about $3k annualized. (THANK YOU!)

Believe it or not, as far as I know, whistleblowers are standing up to Meta on principle and privilege.

Turns out it’s not okay to kill kids for profit, or to retaliate against the individuals—largely women—who speak up about it.

Thank you for your perseverance, Kelly. No one is getting rich in the "protect kids online" space. I see the time and effort you put into this. And as a parent to four, I appreciate it immensely.