To the Good People Who Built Harmful Tech

We built the platforms. Now we can help pass the laws to protect the children using them, because Big Tech won't.

When I started working at Facebook, Alex Peiser was an 8-year-old. He loved his friends, family, and youth group. I didn’t know Alex, but I thought I was going to make his life better; I thought I was joining a company that was going to make everyone’s life better.

Like many tech workers, I genuinely believed I was making the world more open and connected. I thought we were making media more personal, more meaningful. I praised and proselytized the power of using behavioral targeting and algorithmic delivery to drive awareness, consideration, intent, and action. I thought this new economy would change the world, but I didn’t fully grasp how.

In 2017, Alex experienced a breakup and went to Instagram looking for songs and content to help him cope. Over the course of three days, the 17-year-old theater kid and Boy Scout was served over 1,200 pieces of content that glorified suicide and told him that he would never be loved, would always be alone, and that he should kill himself. And then the products that we built to drive influence drove influence.

From awareness, to consideration, to intent, to action.

Alex is one of thousands: the Social Media Victims Law Center (SMVLC), based in Seattle, is currently handling over 4,000 cases of children harmed by social media.

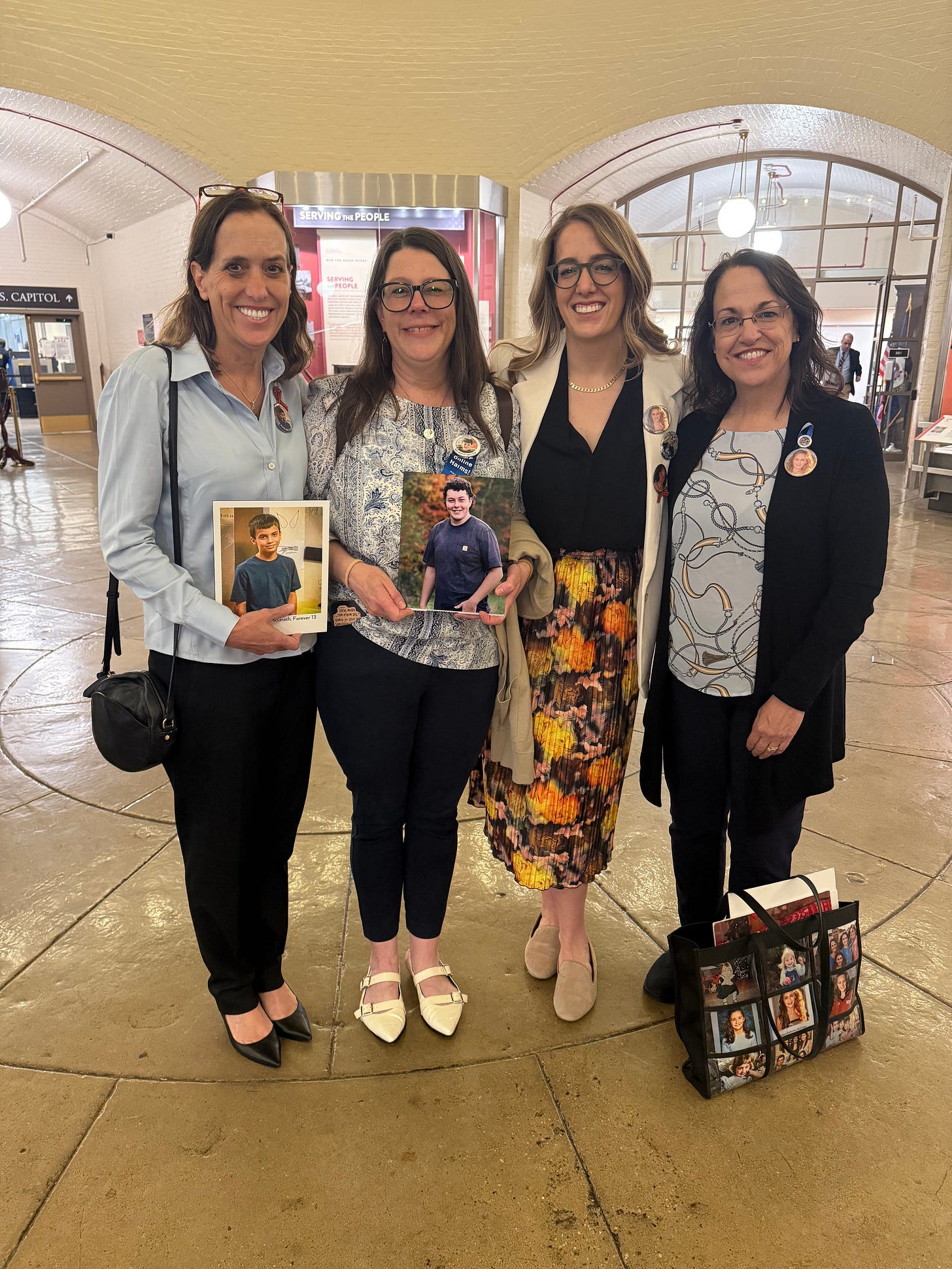

ParentsSOS members hold up photos of their lost children during Zuckerberg’s 2024 Senate testimony. Photo by Brendan Smialowski for Getty Images.

This issue continues to pick up steam. I was in Washington, D.C., this week to attend a workshop hosted by the Federal Trade Commission on the attention economy, exploring social media harms to children and teens. I also had the chance to get to know advocacy groups like Digital Childhood Alliance, Fairplay, and ParentsSOS (a group of parents united by the unimaginable loss of a child to social media harms), and later to hit The Hill with them.

While in D.C., I joined Fairplay and ParentsSOS for a screening of Can’t Look Away, a documentary film in which directors Matthew O’Neill and Perri Peltz explore SMVLC’s high-stakes legal efforts to hold tech companies accountable for the harm they cause. Based on investigative reporting by Bloomberg News’ Olivia Carville, Can’t Look Away explores the urgent need for industry reform and serves as a wake-up call about the dangers of social media.

I learned so much and urge you to watch it. ParentsSOS’ Amy Neville offered a link to view the film on a sliding scale starting at $0 per ticket, but please purchase the film if you’re able.

Families like Alex’s have little to no recourse, and social media companies are largely immunized against accountability for the harms their products cause. They hide behind a law that was written before MySpace existed: Section 230 of the Communications Decency Act of 1996.

After the screening, I took a 90-minute speed walk in flats that had already burned blisters, and experienced a profound sense of guilt and sadness. The company that I joined in 2009 would have been the first on The Hill fighting for necessary legislation, and today they spend millions trying to dodge it.

Their abdication doesn’t need to be ours. Those of us who understand products like behavioral targeting and features like infinite scroll, who are familiar with Big Tech’s privacy-aware identity verification capabilities, and who know the technical barriers to and opportunities for legislation: we are needed in this fight.

Companies are using words like “free expression,” “innovation,” or “user empowerment” as smokescreens for profit. And now, as momentum grows for laws like the Kids Online Safety Act (KOSA) and App Store Accountability Act (ASAA), Big Tech is spending millions to stop them. They’ve mobilized well-funded lobbying teams and front groups to paint regulation as censorship, when in truth, it’s accountability they fear.

What Kids Are Up Against

Mental Health Impacts (Depression, Anxiety, Suicidal Ideation)

Surgeon General Warning: In May 2023, the U.S. Surgeon General cautioned that social media can pose a “profound risk of harm” to youth mental health. Teens who use social media more than 3 hours per day have been found to face double the risk of poor mental health outcomes, including symptoms of depression and anxiety, yet the average teen’s use now exceeds 3.5 hours daily.

White House Asserts “Undeniable” Relationship: Adolescent mental health problems have surged over the past decade. By 2021, 42% of U.S. high school students felt persistently sad or hopeless (up from 28% in 2011). While multiple factors are at play, the White House notes “undeniable evidence” that social media has contributed to this youth mental health crisis.

Documented Links to Depression & Suicide: Large-scale research is increasingly affirming correlations between social media and mental distress. The CDC’s 2023 Youth Risk Behavior Survey found frequent social media use (several times a day) is strongly associated with higher rates of persistent sadness/hopelessness and having considered or planned suicide.

“Rabbit Hole” Effect (Self-Harm and Suicide): Investigations by Amnesty International in 2023 highlighted how TikTok’s For You Page can rapidly pull children into “rabbit holes” of depressing and self-harm content. In Amnesty’s test, they created accounts as 13-year-olds and “liked” some mental health videos; within 20 minutes, over half of the TikTok feed was filled with content about mental health struggles, including multiple videos that romanticized or encouraged suicide. Over an hour, the algorithm kept intensifying the stream of depressive content.

Addictive Design and Compulsive Use

Designed to Hook Kids: Social platforms employ design techniques (endless feeds, auto-play videos, constant notifications, visible likes, scores/ratings, etc.) to maximize user engagement. The White House warns that platforms “use manipulative design… to promote addictive and compulsive use by young people to generate more revenue.”

“Instagram and other apps are designed to keep people using them for as many hours as possible… meaning a collision between what’s good for profit and what’s good for mental health.”

- Psychologist Jean Twenge

Compulsive Use & “Digital Addiction”: Over 20% of adolescents met criteria for “pathological” or addictive social media use, with an additional ~70% at risk of mild compulsive use. Teens themselves often recognize the problem—many say social media makes them feel “addicted” or unable to stop scrolling, even when it negatively affects their mood. The Surgeon General highlighted that teens commonly report social media makes them feel worse “but they can’t get off of it.”

Compulsive behavior is reinforced by product design: app features are often deliberately tuned to exploit the brain’s reward pathways (similar to gambling mechanisms) and are particularly dangerous for children and teens.

Sleep Deprivation and Attention Issues: Nearly 1 in 3 adolescents report staying up past midnight on screens (typically on social apps). Chronic late-night usage contributes to sleep deficits, daytime fatigue, and trouble concentrating in school.

Always Online Culture: 95% of teens are on social platforms, and ~36% say they use them “almost constantly” – rarely unplugging. This “always online” culture, fueled by persuasive design, can crowd out offline development and amplify mental health strains.

Viral Challenges: Beyond self-harm, algorithms can amplify violent challenges or hateful content. In many cases, dangerous viral “challenges” with devastating consequences (e.g., choking or fainting challenges) have spread among kids primarily because algorithms boosted those videos’ visibility once they gained traction.

Bullying and Online Harassment

Prevalence of Cyberbullying: According to the CDC’s 2021 Youth Risk Behavior Survey, 16% of U.S. high school students reported being cyberbullied in the past year. Among girls the rate was higher: about 1 in 5 (20%) teenage girls were cyberbullied in 2021. LGBQ+ teens are especially at risk: over 27% of them reported cyberbullying victimization.

Emotional and Psychological Harm: Victims of cyberbullying frequently suffer heightened depression, anxiety, loneliness, and low self-esteem. Notably, youth who are harassed or humiliated online are at significantly greater risk of suicidal ideation and attempts.

Grooming and Online Predators

Rising Grooming Crimes: The proliferation of social media has been linked to a sharp rise in online grooming and sexual exploitation of minors. UK data shows 150 different platforms were used in grooming crimes, with the majority occurring via major social networks. 73% of cases involved either Snapchat (26%) or Meta’s apps (Instagram, Facebook, WhatsApp: 47%).

Global Exploitation Surge: Internationally, child protection agencies report unprecedented levels of online sexual exploitation. Surveys in multiple countries estimate that up to 1 in 5 children have been subjected to some form of online sexual exploitation or solicitation within a single year.

Law enforcement agencies (e.g., the U.S. ICAC Task Forces) have conducted hundreds of thousands of investigations involving predators using social platforms to lure minors. Yet the sheer volume of incidents far outstrips current policing capacity.

Platforms Enabling Abuse: A 2023 Wall Street Journal exposé showed Instagram’s recommendation algorithms “connect and promote” networks of pedophiles. Simply viewing one deviant account caused Instagram to start suggesting numerous similar ones (“even glancing contact… can trigger the platform to recommend users join it”).

Body Image Issues and Disordered Eating

Negative Body Image and Social Comparison: Body image concerns affect over half of adolescent girls, and social media is a known contributor.

Instagram’s Toxicity for Teen Girls: Facebook’s internal research (2019–2020) found that Instagram in particular was toxic to many teen girls’ body image. One in 3 teen girls in the company’s studies said Instagram made them feel worse about their bodies when they were already feeling insecure. Participants blamed Instagram for magnifying appearance-driven anxiety and eating disturbances.

Pro-Eating Disorder Content: Social media algorithms have been known to amplify harmful content like pro-anorexia (“pro-ana”) or extreme diet postings. A 2023 study found TikTok’s algorithm “might exacerbate eating disorder symptoms via content personalization,” essentially creating a rabbit hole that reinforces disordered eating thoughts.

Critical Legislative Efforts to Join

I’m not willing to accept that Big Tech “can’t solve these problems” while they manage to put entire computers into a pair of sunglasses and to fly drones over Africa to give rural villages access to Facebook.

Common-sense legislation will force companies like Meta to take their responsibility to safety as seriously as they take their responsibility to shareholders.

The Kids Online Safety Act

The Kids Online Safety Act (KOSA) is a bipartisan bill that requires social media companies to:

Provide basic safeguards and tools for parents and kids

Default kids to the most restricted privacy settings

Adhere to a duty of care, a responsibility, not just to their shareholders, but also to their users

This duty of care—a standard that requires social media companies to proactively prevent and mitigate harms to minors on their platforms—is at the heart of KOSA. This isn’t a radical or punitive concept; it’s a common-sense expectation applied across countless industries that impact children’s well-being, from car seat manufacturers to toy designers. For too long, tech companies have operated without this basic responsibility, even as their products became deeply embedded in children’s daily lives. KOSA’s duty of care provision means platforms would no longer be allowed to prioritize engagement and profit over safety without consequence.

KOSA also establishes a transparency standard under which academics or vetted third parties could access information about documented harms, including reports of deaths linked to social media platforms and their products, and hold those platforms accountable for responding to that data.

If you, like me, helped to build these products and you know their intent and effect, or if you’ve seen profit put before safety, now is the time to speak out in support of KOSA. We can’t go back and undo the harm these products have caused, but as the people who built them, we are uniquely positioned to speak out and demand common-sense tech regulation to keep kids safe.

The App Store Accountability Act

Another critical piece of bipartisan legislation to discuss and rally behind is the App Store Accountability Act (ASAA).

Today, developers in Google’s and Apple’s app stores set their own age ratings, and Apple does not adequately verify them, as a search for "nudify" apps in the Apple App Store shows: many are rated for 4-year-olds. Why would Apple make a change when it earns 30% of every dollar spent downloading these apps?

You'd think protecting kids would be enough, but it's not.

Additionally, parents are not adequately informed of risks, such as mechanisms for adult strangers to interact with their kids, or features designed to maximize engagement, like algorithmic feeds and infinite scroll. Snapchat features like "Snap Score," "Quick Add," and "Snap Map" have created a pathway for drug dealers to groom kids and then locate them.

Parents report that kids get around restrictions by using a secondary device to access restricted apps, deleting and re-downloading apps, or creating new email addresses to set up a new iCloud account, all of which can be done without parental involvement or age verification.

Many bereaved families I met this week had rules at home like "Internet goes to bed at 10pm" or "Instagram is only accessed on the family computer" but ultimately were helpless against an ecosystem designed to maximize profit, while they and their kids are blamed.

Google and Apple want age verification to happen on an app-by-app basis, with every developer responsible for their own age verification solution. This would shift the burden to independent developers who already pay app stores a significant commission on every dollar earned. Their proposal is also unnecessarily complex, easier to abuse, and anti-innovation. And it won't adequately protect kids.

It's all about profit preservation for the biggest players, regardless of the loopholes and potential harms it creates for kids. It's outrageous.

The App Store Accountability Act requires:

Clear and accurate app age ratings

Secure age verification

Effective parental oversight of downloads, purchases, and usage

Additionally, the bill:

Requires annual certification of these safety standards

Offers parents a straightforward mechanism to report ineffective safety measures or inaccurate age ratings

Explicitly prohibits the sale or misuse of children's age data

As I said in this week's congressional briefing:

“App Stores are the perfect chokepoint: they know how to collect age data and they already control every download. Silicon Valley celebrates leveraged opportunities for impact and The App Store Accountability Act is just that.”

We must tie accountability to profitability: there is simply no excuse for banking billions while children die and parents beg for help.

Other Resources:

More on KOSA from sponsor Senator Blumenthal here

More on the ASAA from sponsor Senator Lee here

Read The App Store Needs to Grow Up by Lennon Torres of Heat Initiative

Watch Meta whistleblower Arturo Bejar’s Senate testimony

Listen to Nicki Petrossi’s interview with Meta whistleblower David Erb

Digital Childhood Alliance’s ASAA FAQ here

Fairplay’s legal analysis of KOSA here

A Message for Grieving Families and Allies in Tech

In closing, and as an invitation, I want to relay the remarks I prepared and read to ParentsSOS members the morning before we hit The Hill together.

When I joined Meta in 2009, you had children, toddlers and newborns.

I believed I was going to make their world better. I believed I was going to create opportunity and foster connection. I believed leadership. I experienced proof points. And, naively, I believed the internal propaganda about our ambitions, priorities, and values to be genuine.

When the veil of ignorance finally lifted, and I was expected to put the company’s profit before the safety of children, I did the right thing. But the truth that I now can’t unsee is that I spent 14 years building a company, an industry, that won’t acknowledge what you deserve to hear: your families have been robbed in the name of corporate greed.

I feel tremendous guilt for the role I played. And it’s justified. My time, resources, and brainpower that used to be dedicated to Meta are now dedicated to the truth. Meta’s open disregard for the safety of kids and their toxic pattern of silencing women, usually mothers, who speak up about safety concerns, is not acceptable, and I won’t stop until they’re held to account.

I know I’m not the first and I know there will be so many more to come after me. These companies were built brick by deceptive brick and stand like a giant Jenga tower.

Every employee who tells the truth and every parent who shares their story pulls out a piece. I don’t know how many more pieces it will take, but I know the tower will fall.

I have so much respect for all of you. Your kids won the parent lottery, because I could not imagine a more profound expression of love than the way you’re fighting for your children.

And all of our children.

And now, I believe in that love more than I believe in anything else.

Meet at the Jenga Tower

“Every employee who tells the truth and every parent who shares their story pulls out a piece. I don’t know how many more pieces it will take, but I know the tower will fall.”

Current and former tech workers possess a unique combination of technical expertise, historical context, and firsthand understanding, positioning them as powerful and credible advocates for KOSA and the ASAA, able to illuminate precisely how current practices put children at risk and why legislative intervention is urgently needed.

How To Get Involved

Educate yourself on the scale of Big Tech’s lobbying and its war on accountability. These are the same companies that insist they care about child safety while quietly dismantling trust and safety teams and gutting enforcement.

Watch Can’t Look Away. This documentary chronicles the lives of families shattered by social media harms—and the legal efforts trying to hold companies accountable.

Read “The Tech Exit” by Clare Morell. It lays bare the neurological and developmental harms of smartphones and algorithmic feeds on children and teens.

Speak out if you’ve worked in these systems. Testify. Write. Contact lawmakers. Clarify the spin.

Support legislation like KOSA and the App Store Accountability Act. Call your representatives. Show up at town halls. Share your story. These aren't partisan efforts; they're about whether children deserve basic, common-sense safety protections online.
